Stuttgart Media University

Stuttgart, Germany

What is the difference between:

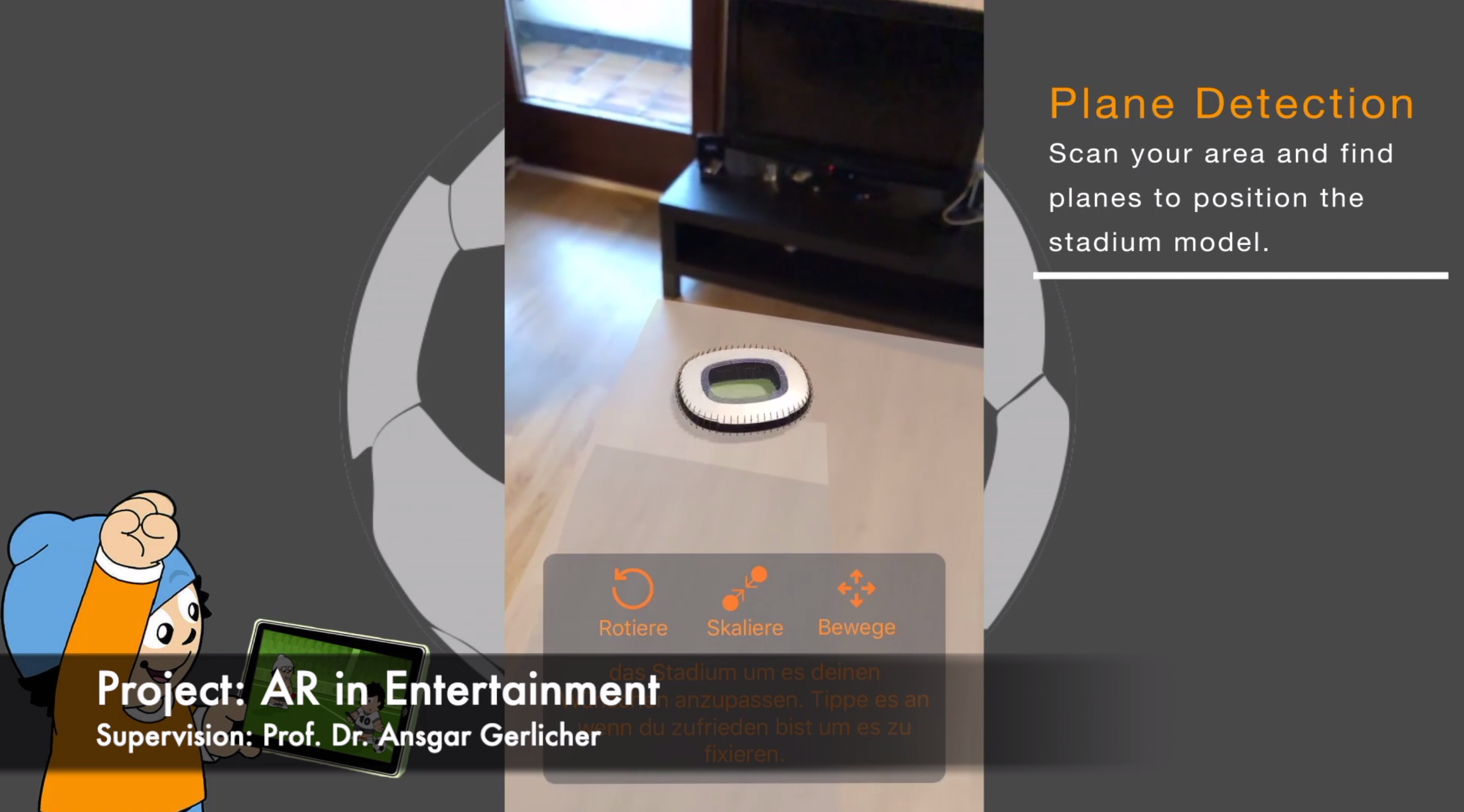

AR in Entertainment - Stuttgart Media University, Semesterproject Winterterm 2017-18

YouTube: masking objects ARKit 3 People Occlusion / Masking Feature

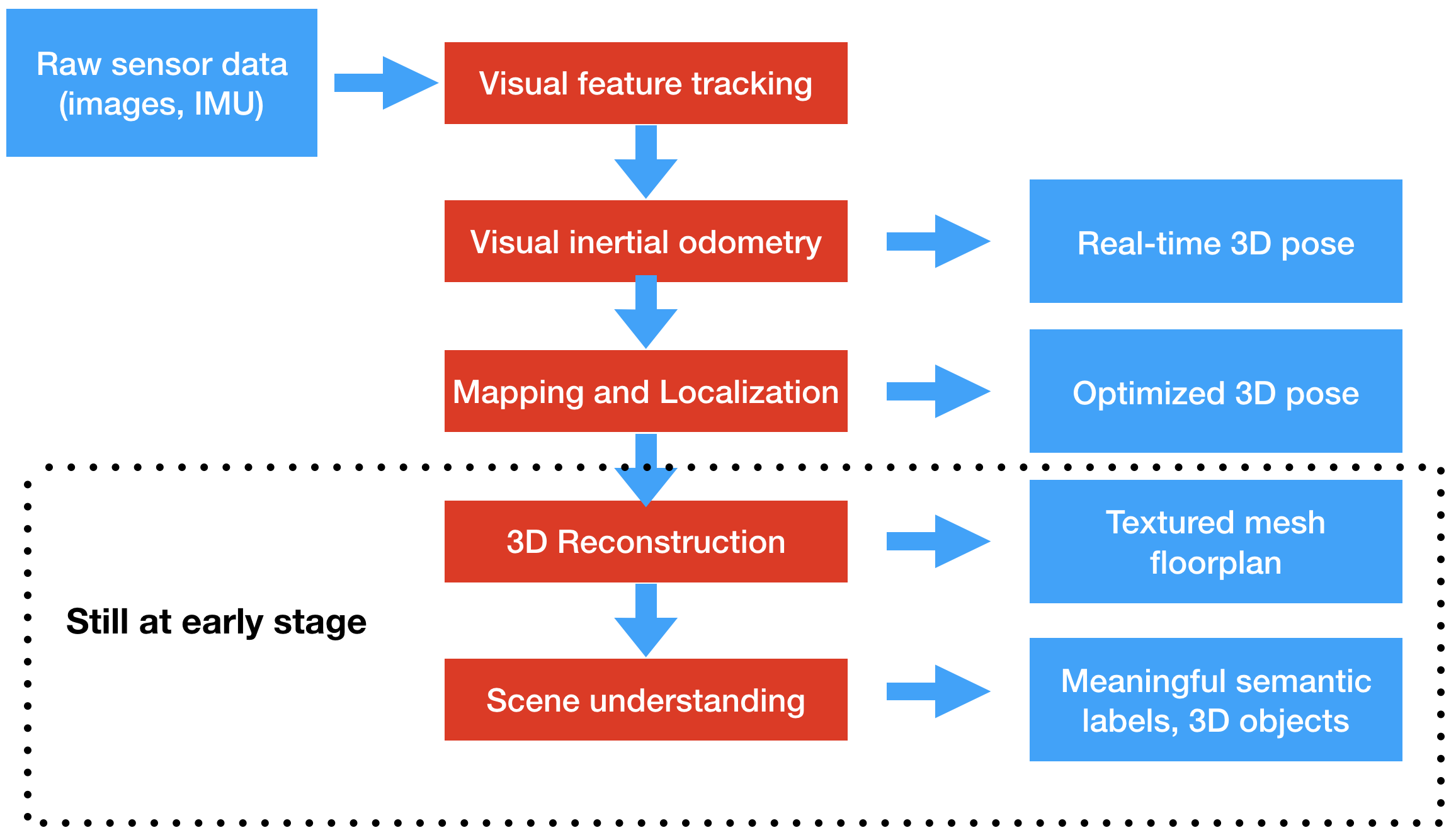

Apple introduced the ARKit framework at WWDC 2017 and shipped it with iOS 11. The new version, ARKit 2.0, was released in September 2018 with iOS 12. ARKit provides an API for developing AR applications that augment the camera view and place virtual 3D objects within the real world, using your iOS device as a window to see them.

You will learn how to create your own AR app that can:

Let’s jump right in and create a first AR app in Xcode:

The Quick Look API is a very easy way to get a nice AR impression. Let’s try it:

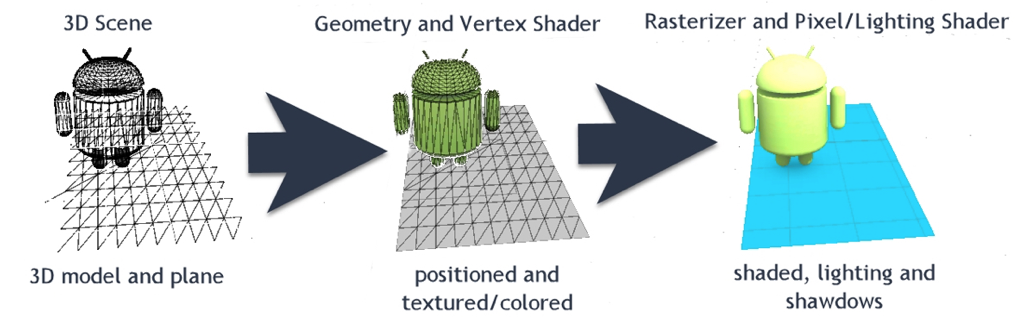
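The Quick Look approach mentioned above can be sketched roughly as follows. This is a minimal sketch, not the assignment solution: the class name `QuickLookViewController` and the method `showModel()` are hypothetical, and it assumes a file named `Tugboat.usdz` has been added to the app bundle.

```swift
import UIKit
import QuickLook

// Hypothetical view controller; assumes "Tugboat.usdz" is part of the app bundle.
final class QuickLookViewController: UIViewController, QLPreviewControllerDataSource {

    // Call this, for example, from a button action.
    func showModel() {
        let previewController = QLPreviewController()
        previewController.dataSource = self
        present(previewController, animated: true)
    }

    // Quick Look asks how many items it should preview.
    func numberOfPreviewItems(in controller: QLPreviewController) -> Int {
        return 1
    }

    // Quick Look asks for the item at the given index; NSURL conforms to QLPreviewItem.
    func previewController(_ controller: QLPreviewController,
                           previewItemAt index: Int) -> QLPreviewItem {
        guard let url = Bundle.main.url(forResource: "Tugboat", withExtension: "usdz") else {
            fatalError("Tugboat.usdz is missing from the app bundle")
        }
        return url as NSURL
    }
}
```

On a device that supports ARKit, the preview offers an AR mode for USDZ files without any ARKit code in your app.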

Now that we’ve created our first two AR apps (that was easy, right?), let’s try to get a better understanding of the most important ARKit classes used:

On the next few slides we will use these classes and protocols to:

To detect planes, you first need to create an ARSession and configure it using an ARConfiguration object. For the configuration, use the ARWorldTrackingConfiguration class and turn on plane detection as shown here:

let configuration = ARWorldTrackingConfiguration()

configuration.planeDetection = [.horizontal, .vertical]

Next, create an ARSCNView (if you don't already have one) and run its session with the configuration object:

let sceneView = ARSCNView()

sceneView.session.run(configuration)

Now your scene view is configured to detect horizontal and vertical planes in the real world.
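In a real app, the configuration steps above usually live in the view controller's lifecycle methods. The following is a minimal sketch under some assumptions: the class name `PlaneDetectionViewController` is hypothetical, and `sceneView` is assumed to be an outlet connected in the storyboard.

```swift
import UIKit
import ARKit

// Hypothetical view controller; assumes `sceneView` is connected in the storyboard.
class PlaneDetectionViewController: UIViewController, ARSCNViewDelegate {

    @IBOutlet var sceneView: ARSCNView!

    override func viewDidLoad() {
        super.viewDidLoad()
        // Receive the renderer(...) callbacks in this class.
        sceneView.delegate = self
        // Optional: draw the feature points ARKit is currently tracking.
        sceneView.debugOptions = [ARSCNDebugOptions.showFeaturePoints]
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking is not available on every device.
        guard ARWorldTrackingConfiguration.isSupported else { return }
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = [.horizontal, .vertical]
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Stop camera and tracking work while the view is not visible.
        sceneView.session.pause()
    }
}
```

Running the session in `viewWillAppear` and pausing it in `viewWillDisappear` keeps the camera from running while the view is off screen.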

To be notified when ARKit detects a plane, your view controller should adopt the ARSCNViewDelegate protocol, be set as the scene view's delegate, and implement the following method:

func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {

}

This method tells the delegate that a SceneKit node corresponding to a new AR anchor has been added to the scene. With plane detection enabled, it is called whenever ARKit detects a new plane. The plane is added as a node at the anchor position. The parameters are:

- renderer: The object that renders the scene (usually your ARSCNView, which implements the SCNSceneRenderer protocol)
- node: The SCNNode that has been added to the scene; you can use it to add renderable content
- anchor: The ARAnchor that defines the position and orientation of the node within the scene

Within the “renderer” callback method we can use the provided information to visualize the detected planes. The SCNPlane class can be used for this. The following code shows how an SCNPlane is created based on the information from the ARAnchor:

guard let planeAnchor = anchor as? ARPlaneAnchor else { return }

let extentPlane: SCNPlane = SCNPlane(width: CGFloat(planeAnchor.extent.x), height: CGFloat(planeAnchor.extent.z))

First we cast the ARAnchor to an ARPlaneAnchor in order to get information on its extent. Then we create the SCNPlane using the extent along x and z as width and height for the new object. An SCNPlane represents a rectangle with controllable width and height. The plane has one visible side.

In order to render a 3D object in the scene we need to create a SCNNode. This can be done as follows:

let extentNode = SCNNode(geometry: extentPlane)

// position the node

extentNode.simdPosition = planeAnchor.center

// `SCNPlane` is vertically oriented in its local coordinate space, so

// rotate it to match the orientation of `ARPlaneAnchor`.

extentNode.eulerAngles.x = -.pi / 2

// Make the visualization semitransparent

extentNode.opacity = 0.3

// add the node to the scene

node.addChildNode(extentNode)
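ARKit keeps refining a plane after it has been detected, so the visualization quickly becomes outdated unless it is updated as well. A minimal sketch of how this could be handled in `renderer(_:didUpdate:for:)` follows. The class name `PlaneUpdater` is hypothetical, and the code assumes that the visualization node created above is the first child of the anchor's node.

```swift
import ARKit

// Hypothetical delegate object; the method can equally live in your view controller.
final class PlaneUpdater: NSObject, ARSCNViewDelegate {

    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        // Only handle plane anchors whose first child node carries an SCNPlane (assumption).
        guard let planeAnchor = anchor as? ARPlaneAnchor,
              let extentNode = node.childNodes.first,
              let extentPlane = extentNode.geometry as? SCNPlane else { return }

        // Resize the visualization to the refined extent of the plane.
        extentPlane.width = CGFloat(planeAnchor.extent.x)
        extentPlane.height = CGFloat(planeAnchor.extent.z)

        // The center of the plane can move as the estimate improves.
        extentNode.simdPosition = planeAnchor.center
    }
}
```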

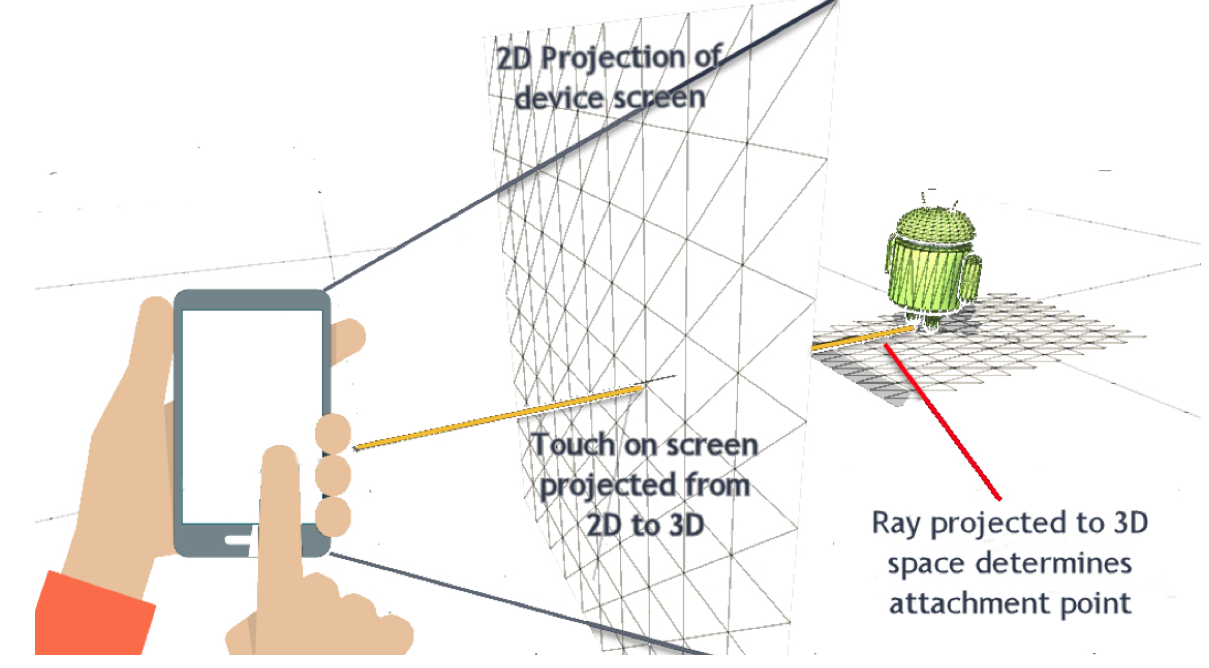

To detect touches on a view, override the touchesBegan(_:with:) method.

To find out which object in the 3D scene was touched, use the hitTest(_:types:) method of ARSCNView.

It finds hits against different types of real-world features in a scene, such as feature points and planes.

The following code shows how an existing plane can be ‘hit tested’:

override func touchesBegan(_ touches: Set<UITouch>, with event: UIEvent?) {

super.touchesBegan(touches, with: event)

guard let touch = touches.first else { return }

let results : [ARHitTestResult] = self.sceneView.hitTest(touch.location(in: self.sceneView), types: [.existingPlaneUsingExtent])

// results are sorted from nearest to farthest, so take the closest hit

guard let hitResult = results.first else { return }

// let's show our model in the scene

self.renderSomethingHere(hitFeature: hitResult)

}

The hitTest() method returns an array of ARHitTestResult objects. The example above tests whether the location of a touch on the view “hits” an existing plane, taking the plane's extent (its size) in the world into account.

To render a node in the 3D scene at the hit location, we need the position and orientation of the hit test result relative to the world coordinate system.

The worldTransform property provides this.

This is a transform matrix that indicates the intersection point between the detected surface and the ray that created the hit test result. A hit test projects a 2D point in the image or view coordinate system along a ray into the 3D world space and reports results where that line intersects detected surfaces.

To turn this into a location in 3D space, we need x, y, z coordinates. These are the translation components of the transform, which SCNMatrix4 exposes as m41, m42, and m43. They can be packed into a vector using SCNVector3Make as follows:

func renderSomethingHere(hitFeature: ARHitTestResult){

let hitTransform = SCNMatrix4(hitFeature.worldTransform)

// get coordinate for node

let hitPosition = SCNVector3Make(hitTransform.m41,

hitTransform.m42,

hitTransform.m43)

//create a clone of an existing node

let node = self.someSCNNode!.clone()

// set the position within 3D space

node.position = hitPosition

// add the node to the root node of the ARSCNView's scene

self.sceneView.scene.rootNode.addChildNode(node)

}
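Note that hitTest(_:types:) is deprecated as of iOS 14 in favor of raycasting, which is available from iOS 13. A minimal sketch of the raycast equivalent of the code above follows. The function name `worldPosition(at:in:)` is hypothetical; the target `.existingPlaneGeometry` is chosen here as the closest match to `.existingPlaneUsingExtent`.

```swift
import ARKit

// Hypothetical helper: returns the world position under a screen point, or nil if no plane was hit.
@available(iOS 13.0, *)
func worldPosition(at point: CGPoint, in sceneView: ARSCNView) -> SCNVector3? {
    // Build a ray from the screen point that only reports hits on detected planes.
    guard let query = sceneView.raycastQuery(from: point,
                                             allowing: .existingPlaneGeometry,
                                             alignment: .any),
          // Results are sorted by distance, so the first one is the closest hit.
          let result = sceneView.session.raycast(query).first else { return nil }

    // The fourth column of the transform holds the translation.
    let translation = result.worldTransform.columns.3
    return SCNVector3(translation.x, translation.y, translation.z)
}
```

The returned vector can be assigned to `node.position` in the same way as `hitPosition` above.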

In the above example an SCNNode is cloned, positioned and then added to the scene as a child node. Let’s see how the node is created:

As we learned in the second assignment, it is quite easy to display USDZ files using Quick Look.

Without Quick Look, we have to load them as SCNNodes ourselves using the SCNReferenceNode class.

Fortunately, this is fairly simple. Here is some example code:

// unwrap the URL safely instead of force-unwrapping it

if let url = Bundle.main.url(forResource: "Tugboat", withExtension: "usdz"),

let refNode = SCNReferenceNode(url: url) {

// the file was found so load the model

refNode.load()

// set our boat variable

self.boat = refNode

// scale the boat and make it much smaller

self.boat?.scale.x = 0.05

self.boat?.scale.y = 0.05

self.boat?.scale.z = 0.05

}

That’s it. You can now use the SCNNode and add it to the scene. Now let’s try it out - hands on:

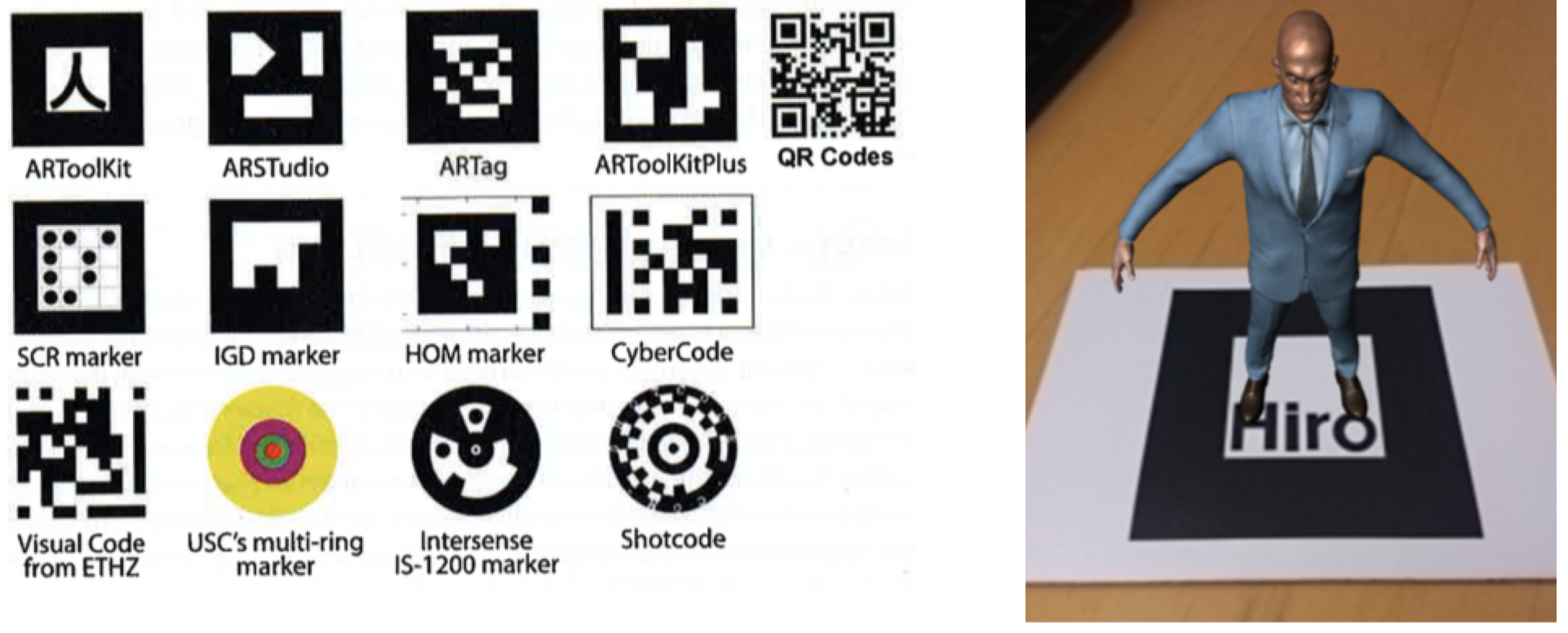

For the detection and tracking of images, ARKit provides basically two classes:

Images and their movements are tracked and objects can be placed relative to the tracked position of those images.

To enable image detection the following steps are necessary:

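1. Load one or more ARReferenceImage resources from your app’s asset catalog.
2. Create a configuration and assign the reference images to it: the detectionImages property of a world-tracking configuration, or the trackingImages property of an image-tracking configuration.
3. Call the run(_:options:) method to run a session with your configuration.

The code below shows how to execute these steps when starting or restarting the AR experience: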

let configuration = ARImageTrackingConfiguration()

guard let trackedImages = ARReferenceImage.referenceImages(inGroupNamed: "Photos", bundle: Bundle.main) else {

print("No images available")

return

}

configuration.trackingImages = trackedImages

configuration.maximumNumberOfTrackedImages = 1

// Run the view's session

sceneView.session.run(configuration)

When one of the reference images is detected, the session automatically adds a corresponding ARImageAnchor to its list of anchors.

To react to an image detection, implement for example the renderer(_:didAdd:for:) method as follows:

func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {

let shipScene = SCNScene(named: "art.scnassets/ship.scn")!

let shipNode = shipScene.rootNode.childNodes.first!

shipNode.eulerAngles.x = -.pi / 2

node.addChildNode(shipNode)

}

To use the detected image as a trigger for AR content, you’ll need to know its position and orientation, its size, and which reference image it is.

The anchor’s inherited transform property provides position and orientation, and its referenceImage property tells you which ARReferenceImage object was detected.

If your AR content depends on the extent of the image in the scene, you can then use the reference image’s physicalSize to set up your content, as shown in the code below.

guard let imageAnchor = anchor as? ARImageAnchor else { return }

let referenceImage = imageAnchor.referenceImage

// Create a plane to visualize the initial position of the detected image.

let plane = SCNPlane(width: referenceImage.physicalSize.width, height: referenceImage.physicalSize.height)

let planeNode = SCNNode(geometry: plane)

planeNode.opacity = 0.25

//rotate the plane to match.

planeNode.eulerAngles.x = -.pi / 2

// Add the plane visualization to the scene.

node.addChildNode(planeNode)
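With an image-tracking configuration the anchor keeps following the image, and it stays in the session even when the image leaves the camera view. A minimal sketch of how the content could be hidden while the image is not tracked is shown below. The class name `ImageTrackingDelegate` is hypothetical.

```swift
import ARKit

// Hypothetical delegate object; the method can equally live in your view controller.
final class ImageTrackingDelegate: NSObject, ARSCNViewDelegate {

    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let imageAnchor = anchor as? ARImageAnchor else { return }

        // Hide all content attached to the anchor while the image is out of view.
        node.isHidden = !imageAnchor.isTracked

        // The name is the one given to the image in the AR resource group.
        let name = imageAnchor.referenceImage.name ?? "unnamed image"
        print("\(name) tracked: \(imageAnchor.isTracked)")
    }
}
```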

You can provide your own images as a reference. This is done by creating an AR resource group in your Assets and then adding the image files (jpg or png) via drag and drop. The following things should be considered:

Xcode will warn you if the image quality is not good enough, when you add an image.

For each session you should load one resource group. Apple recommends not using more than 25 images in one session for performance reasons. Using more images in your app is possible, but you should then load another resource group, for example depending on the context or location.

Now you should have enough information to develop your own AR image tracking app. Have fun!

With iOS 12, Apple also supports 3D object detection. To detect objects, the following steps are necessary:

1. Create an ARWorldTrackingConfiguration.
2. Load your ARReferenceObject resources and assign them to the configuration's detectionObjects property.

The rest is the same as with detecting images. Here is some example code to do the setup:

let configuration = ARWorldTrackingConfiguration()

guard let referenceObjects = ARReferenceObject.referenceObjects(inGroupNamed: "gallery", bundle: nil) else {

fatalError("Missing expected asset catalog resources.")

}

configuration.detectionObjects = referenceObjects

sceneView.session.run(configuration)

When ARKit detects one of the reference objects, the session automatically adds a corresponding ARObjectAnchor to its list of anchors.
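As with images, the new anchor arrives in `renderer(_:didAdd:for:)`. The following is a minimal sketch, assuming you want to mark the detected object with a semitransparent bounding box. The class name `ObjectDetectionDelegate` is hypothetical.

```swift
import ARKit

// Hypothetical delegate object; the method can equally live in your view controller.
final class ObjectDetectionDelegate: NSObject, ARSCNViewDelegate {

    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let objectAnchor = anchor as? ARObjectAnchor else { return }
        let object = objectAnchor.referenceObject

        // Build a box with the size that was recorded when the object was scanned.
        let box = SCNBox(width: CGFloat(object.extent.x),
                         height: CGFloat(object.extent.y),
                         length: CGFloat(object.extent.z),
                         chamferRadius: 0)
        let boxNode = SCNNode(geometry: box)

        // The center is given relative to the origin of the reference object.
        boxNode.simdPosition = object.center
        boxNode.opacity = 0.3

        node.addChildNode(boxNode)
        print("Detected object: \(object.name ?? "unnamed object")")
    }
}
```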

Reference objects are created by using the scanning app provided by Apple here.

But it is also possible to scan objects in your own app.

This is done by using the ARObjectScanningConfiguration class. Here is some example code:

let configuration = ARObjectScanningConfiguration()

configuration.planeDetection = .horizontal

sceneView.session.run(configuration, options: .resetTracking)

After scanning, call the session's createReferenceObject(transform:center:extent:completionHandler:) method and export or use the scanned object.
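The extraction and export step could look roughly like this. It is a minimal sketch: the function name `saveScannedObject` is hypothetical, and the `transform`, `center`, and `extent` values that describe the scanned region are assumed to come from your own scanning UI.

```swift
import ARKit

// Hypothetical helper; `transform`, `center`, and `extent` describe the region to extract
// and are assumed to be provided by your own scanning UI.
func saveScannedObject(from session: ARSession,
                       transform: simd_float4x4,
                       center: simd_float3,
                       extent: simd_float3,
                       to url: URL) {
    session.createReferenceObject(transform: transform, center: center, extent: extent) { object, error in
        guard let object = object else {
            print("Scanning failed: \(error?.localizedDescription ?? "unknown error")")
            return
        }
        do {
            // Writes an .arobject file that can be added to an AR resource group in Xcode.
            try object.export(to: url, previewImage: nil)
        } catch {
            print("Export failed: \(error.localizedDescription)")
        }
    }
}
```

The session passed in must be running an ARObjectScanningConfiguration, as set up above.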

More information about this process can be found here

Now let’s try scanning and detecting objects with ARKit:

This was all too easy and you are bored? Go on and try out Apple's example game…and maybe you can create your own version of it!

Bonus: Assignment 6 - Build, Run and Play around with Apple's SwiftShot Game